Let's say that we want to process a bunch of values. The operations that we'll be performing are fairly intensive (and independent for each value), so we'd like to do this in parallel.

We could use DispatchQueue.concurrentPerform for this, with the index of each iteration acting as the value that we want to operate on.

var results: [Int: Data] = [:]

DispatchQueue.concurrentPerform(iterations: 100) { value in

// `value` will be in `0..<100`.

let result = expensiveComputation(value)

results[index] = result // Modifying results concurrently 🤔

}

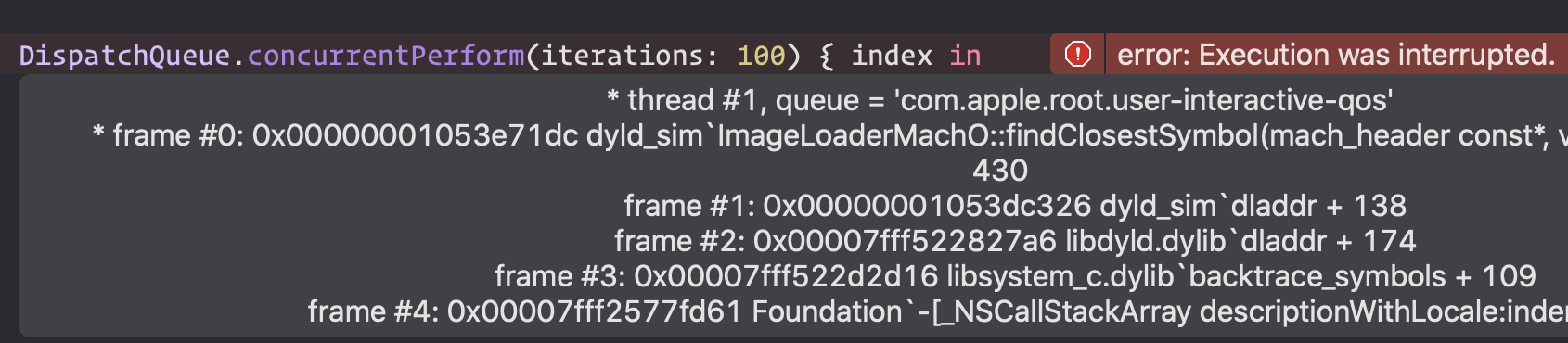

If you try running this first attempt in a playground, you'll get a nasty crash.

That's because we're modifying results from multiple threads concurrently, and Dictionary (like all standard library collections) is not thread safe.

DispatchQueue

We could use a serial DispatchQueue to prevent updating results concurrently.

var results: [Int: Data] = [:]

let queue = DispatchQueue(label: "xyz.jackmorris.results")

DispatchQueue.concurrentPerform(iterations: 100) { value in

let result = expensiveComputation(value)

queue.sync {

// Only one thread will execute here at any time.

results[value] = result

}

}

By only ever updating results inside a queue.sync call, we can be sure that only one thread will update results at any time. If a thread finishes its expensive computation, but another is already updating results, then it will block until the queue is ready.

Here, we're using a DispatchQueue to implement mutual exclusion. We could encapsulate this functionality in our own Mutex type, which hides the fact that we're using Dispatch under the hood.

public final class Mutex {

private let queue = DispatchQueue(

label: "xyz.jackmorris.mutex")

public func with<R>(_ body: () throws -> R) rethrows -> R {

return try queue.sync { try body() }

}

}

var results: [Int: Data] = [:]

let mutex = Mutex()

DispatchQueue.concurrentPerform(iterations: 100) { value in

let result = expensiveComputation(value)

mutex.with { results[value] = result }

}

But! There is one subtle gotcha with using a DispatchQueue in this way: it isn't reentrant. This means that if we're currently holding our mutex (operating inside a .with call), and we attempt to hold it again (with another call to .with), we will end up in a deadlock.

When might this happen? Can't we just make sure that we never call .with multiple times? Sometimes it's not easy to keep track, especially if we have multiple functions that want to execute when holding a Mutex, and there's the possibility of calling one function from another.

struct User {

var age: Int

// ... some other user-related properties.

}

var user = User(age: 24)

let mutex = Mutex() // Used to guard access to `user`.

func updateUser() {

mutex.with {

// ... do some stuff with `user` ...

incrementAge()

}

}

func incrementAge() {

mutex.with {

// 🚨 We may be recursively holding `mutex`!

// Could this deadlock?

user.age += 1

}

}

Reentrancy

A modification to our Mutex will make it reentrant. Rather than using a DispatchQueue, we can use objc_sync_enter and objc_sync_exit, two primitives that effectively power @synchronized in Objective-C.

final public class Mutex {

@inlinable

public func with<R>(_ body: () throws -> R) rethrows -> R {

objc_sync_enter(self)

defer { objc_sync_exit(self) }

return try body()

}

}

The argument passed to objc_sync_enter/objc_sync_exit must be the same for each call, and refers to the object that is used to "lock" against. The Mutex instance itself is perfect for this.

This Mutex is now completely safe to hold, recursively, as many times as required.

let mutex = Mutex()

mutex.with {

mutex.with {

mutex.with {

// You're unlikely to do this, but it's deadlock free 😎

}

}

}

Notice also that we've marked .with as @inlinable. This gives the compiler the chance to inline our implementation across module boundaries, otherwise the cost of passing through the closure may have slight performance implications. If you're building your app in a single module (with whole module optimization enabled) this won't give you any benefit, but it's useful if you're splitting up your binary into frameworks.